Many things could be true

in the future.

What we choose to attend to now is powerful.

And as captured as our attention already feels, the battle is reaching a fever pitch.

Painfully dysregulated, desperate for connection, and hooked on dopamine doom-scroll death spirals — what looks like a market opportunity for vagal nerve toning devices, LLM advertising platforms, and AI slop feeds is also a desperate scramble to extract all remaining value from the infrastructure of attention.

The race to the bottom of surveillance capitalism only has to last as long as AI companies even need users, revenue, and eyeballs to inspire investment. If it all looks a little sloppy, it might be because ad revenue is at this point mostly a flimsy veneer on AI companies’ evolving monetization strategy. “Senator, we sell ads” was fairly recently a revelation to US Congress members, but is also now mostly only a cover story.

As big tech companies’ willingness to deploy features that provide increasingly absurd and triggering content to users hints at, eyes on screen isn’t even the most salient metric anymore. OpenAI and Meta’s test balloons — feeds that will float the uncanny valley in front of anyone desperate enough to digitally dissociate — should be viewed in light of a tradition of targeting teens in moments of emotional distress: a banal cash-grab-by-manipulation more than a viable business model.

With companies conspiring to inflate the appearance of financial activity around AI, the narrative about what success looks like for these businesses has already functionally moved on from driving revenue through an improved advertising and attention feedback loop. Consumer approval won’t be required for AI companies’ future monetization plans, which include embedding computationally intensive processes everywhere until a few companies’ proprietary software is a core layer in all aspects of public and private service delivery, necessitating a massive, concentrated, and environmentally devastating global infrastructure.

What could this bad news for the Earth mean for community and connection online and off? Oxygen. Freedom. An opportunity for humanity. With major AI companies no longer even pretending to care about consumer approval and quality outputs, blatant enshittification drives massive logoffs. Every day, AI gives someone new the ick. People are reading and writing. They’re fucking and fighting. They’re making art and they’re singing in groups and they’re kicking balls, sweating and heaving. They’re picking mushrooms and they’re petting dogs. They always have. And they’re realizing AI isn’t that cool.

I’ve been wrong before. And the opposite could be true too. By this time next year we could have millions tuned into slop feeds, long-blink purchasing against increasingly absurd and sycophantic appeals to the altars of vanity and convenience, living in polyamorous pods with “agentic” companions. But it will be because people are hungry to find and make meaning.

“The algorithm knows what I want” is a satisfying sort of affirmation. I want, therefore I am. But AI is barely scratching the itch, and histamines are flooding to the surface. The feedback loop itself is an allergen.

For those eager to find new ways to outsource meaning making to an imagined machine overlord, there’s “spirit tech.” You can get a job in spirit tech; you can consult a chatbot trained by a guru; you can go to “AI summer camp” for “soul rooted” collaboration with LLMs. This makes sense, of course, since we’re in the midst of a spiritualist economic bubble that’s pinned GDP growth to a religious belief in transcendence of our earthly bodies via LLM.

We’re one more Marc Andreessen manifesto away from AI companies openly declaring a holy war on the world, so enjoy the forbidden fruit while it still tastes sort of like food, I guess.

xoxo, gossip girl

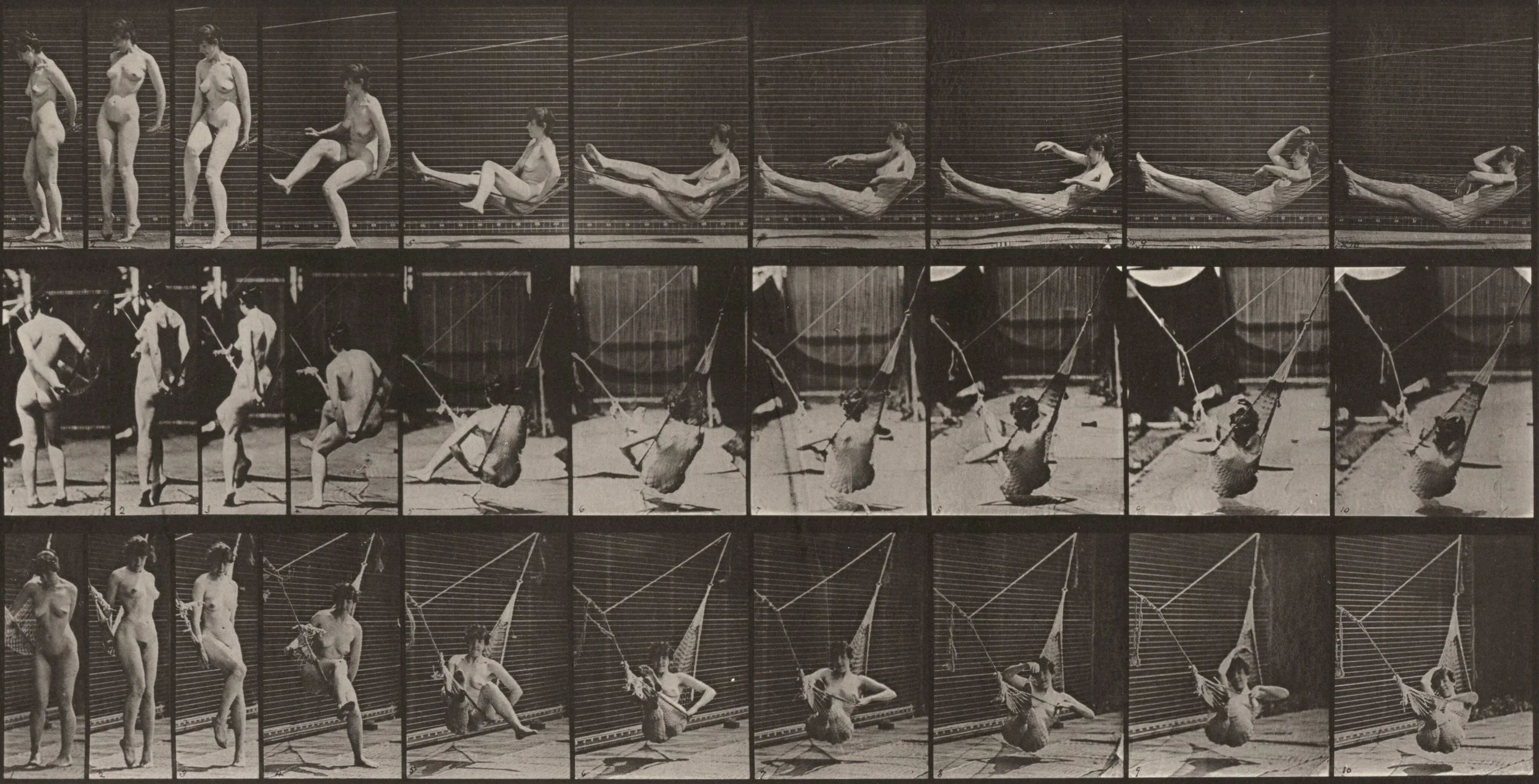

All photographs by © Eadweard Muybridge. 1. Plate Number 544. Artificially induced convulsions, lying down (1887). 2. Plate Number 261. Getting into hammock (1887). Via The National Gallery of Art, Washington DC.